Scaling up in AI involves increasing the size and power of AI models to improve their performance. This process relies on vast amounts of training data, a growing number of model parameters, and significant computational resources. While it has led to impressive advancements, it also brings challenges like environmental impact, accessibility, and ethical concerns.

Hello friends, hope you are doing well!

In recent years, advancements in artificial intelligence (AI) have been nothing short of remarkable. A significant factor driving them is the concept of “scaling up”. Scaling up means making AI models bigger and more powerful so that they perform better. It enables AI to perform complex tasks such as solving math problems, writing software, and creating realistic images and videos. Although AI has been around since the 1950s, the pace of improvement has skyrocketed since 2010. In this post, we will discuss the key components that drive scaling, some key statistics, and the impact of scaling on the future. So, let’s begin.

Key Components of Scaling

Training Data

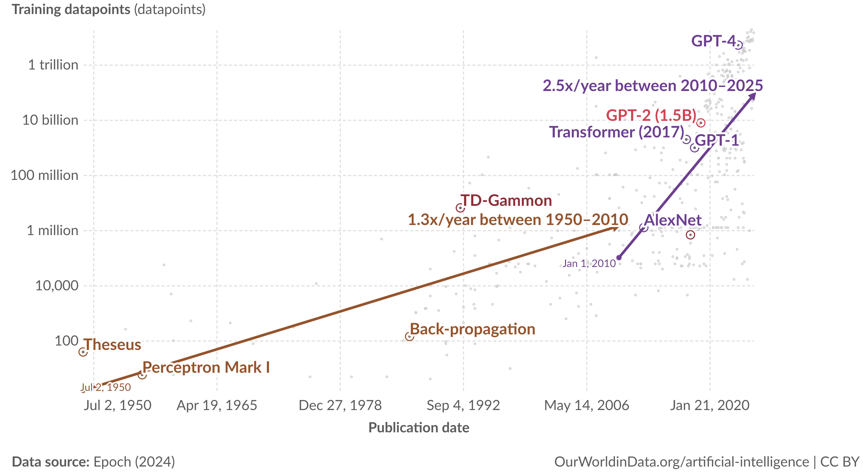

Modern AI models rely on vast amounts of training data. Feeding a model larger and more diverse datasets improves its ability to generalize and increases its learning capacity, enabling it to make better predictions. The volume of data used for training has grown exponentially since 2010, doubling approximately every nine to ten months. For large language models (LLMs) it has grown even faster, roughly tripling each year. For instance, GPT-2 was trained on nearly 4 billion tokens, while GPT-4 was reportedly trained on around 13 trillion tokens.
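
The two growth rates above are easier to compare when expressed in the same units. Here is a minimal Python sketch that converts a doubling time into a yearly growth factor and back; the function names are our own, chosen just for illustration.

```python
import math


def annual_growth_factor(doubling_time_months: float) -> float:
    """Growth over 12 months for a quantity that doubles every `doubling_time_months`."""
    return 2 ** (12 / doubling_time_months)


def doubling_time_months(annual_factor: float) -> float:
    """Doubling time (in months) for a quantity that grows by `annual_factor` per year."""
    return 12 * math.log(2) / math.log(annual_factor)


if __name__ == "__main__":
    # Overall training-data trend: doubling every nine to ten months.
    for months in (9, 10):
        print(f"doubling every {months} months -> x{annual_growth_factor(months):.2f} per year")
    # LLM training data: roughly tripling each year.
    print(f"tripling each year -> doubling every {doubling_time_months(3):.1f} months")
```

Running it shows that doubling every nine to ten months works out to roughly 2.3x to 2.5x per year, while tripling each year corresponds to a doubling time of about 7.6 months, which is why LLM datasets are pulling ahead of the overall trend.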

Model Parameters

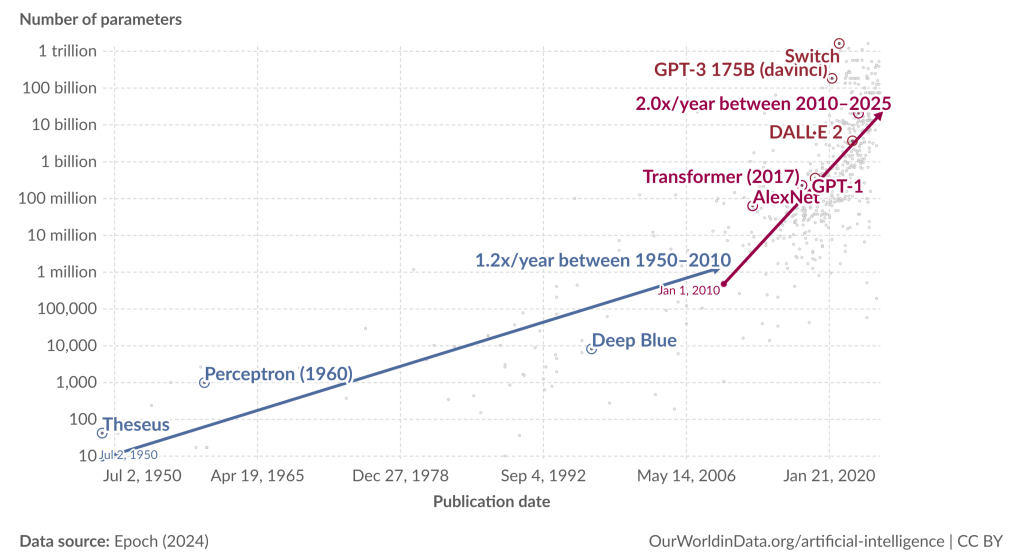

In AI, a parameter is a value within a model that is adjusted during training to help the model make better predictions. Think of parameters as settings or dials that the AI fine-tunes to learn patterns in the data. The number of parameters in AI models has skyrocketed, roughly doubling every year since 2010. For example, GPT-3 has 175 billion parameters, while GPT-4, whose size OpenAI has not disclosed, is believed to contain around 1 trillion parameters. More parameters allow AI models to capture more complicated patterns and relationships within the data, making them more powerful and capable.
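
To get a feel for where a number like 175 billion comes from, here is a back-of-the-envelope count for a GPT-style transformer. It assumes the common approximation of about 12·d² weights per layer (attention plus feed-forward), ignores biases and layer norms, and plugs in the layer count and hidden size published for GPT-3 (96 layers, 12,288) along with the 50,257-token vocabulary it shares with GPT-2. The function name is hypothetical and the result is an estimate, not an exact count.

```python
def approx_transformer_params(n_layers: int, d_model: int, vocab_size: int = 0) -> int:
    """Rough parameter count for a decoder-only transformer.

    Per layer: ~4*d^2 attention weights (Q, K, V and output projections)
    plus ~8*d^2 feed-forward weights (two d x 4d matrices) = ~12*d^2.
    Biases, layer norms and position embeddings are ignored.
    """
    per_layer = 12 * d_model ** 2
    token_embeddings = vocab_size * d_model
    return n_layers * per_layer + token_embeddings


if __name__ == "__main__":
    # GPT-3 (175B) configuration: 96 layers, hidden size 12288, 50257-token vocabulary.
    n = approx_transformer_params(n_layers=96, d_model=12288, vocab_size=50257)
    print(f"{n / 1e9:.1f} billion parameters")
```

This lands at roughly 174.6 billion, close to the rounded 175 billion headline figure. Note that the count grows with the square of the hidden size, which is why widening a model inflates its parameter count so quickly.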

Compute

The computational resources required for AI training have increased exponentially. Compute is typically measured as the total number of floating-point operations (FLOPs) performed during training, where each operation is a single arithmetic calculation such as an addition or multiplication. The surge in available compute enables researchers to train larger and more complex models, which in turn leads to significant improvements in AI performance. Training compute has doubled approximately every six months since 2010. At the time of writing, the most compute-intensive model is estimated to have used about 50 billion petaFLOP (5 × 10^25 FLOP).
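
A widely used rule of thumb for dense transformers estimates training compute as roughly 6 FLOP per parameter per training token (about 2 for the forward pass and 4 for the backward pass). The sketch below applies it to GPT-3, using its 175 billion parameters and the roughly 300 billion training tokens reported in the GPT-3 paper, and also shows what "doubling every six months" means per year. Treat the numbers as order-of-magnitude estimates; the helper names are our own.

```python
PETA = 1e15  # 1 petaFLOP = 10**15 floating-point operations


def training_flops(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb training compute for a dense transformer: ~6 FLOP per parameter per token."""
    return 6 * n_params * n_tokens


def annual_factor_from_doubling(doubling_months: float) -> float:
    """Growth per year for a quantity that doubles every `doubling_months` months."""
    return 2 ** (12 / doubling_months)


gpt3 = training_flops(n_params=175e9, n_tokens=300e9)
frontier = 50e9 * PETA  # the "50 billion petaFLOP" figure, i.e. 5e25 FLOP

if __name__ == "__main__":
    print(f"GPT-3 estimate: {gpt3:.2e} FLOP ({gpt3 / PETA:,.0f} petaFLOP)")
    print(f"Frontier model / GPT-3: {frontier / gpt3:.0f}x")
    print(f"Doubling every 6 months: x{annual_factor_from_doubling(6):.0f} per year")
```

That puts GPT-3 at roughly 3 × 10^23 FLOP, or about 315 million petaFLOP, so the 50 billion petaFLOP frontier figure is on the order of 160 times larger. A six-month doubling time also means compute grows about 4x every year.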

Challenges and Considerations

While the scaling approach has driven remarkable advancements, it also presents several challenges and considerations. One significant challenge is the environmental impact of training large AI models. The computational resources required for these models consume substantial amounts of energy, contributing to carbon emissions. Training GPT-3 is estimated to have consumed 1,287 MWh of electricity, enough to power an average US household for over 120 years. Researchers and companies are increasingly aware of this issue and are exploring ways to make AI training more energy-efficient.
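
The household comparison is simple division, and the same energy figure can be turned into an emissions estimate once you pick a grid carbon intensity. In the sketch below, the 1,287 MWh figure comes from the text, while the household consumption (about 10.5 MWh per year, roughly a US household) and the grid intensity (0.4 kg CO2 per kWh) are illustrative assumptions, not measured values for GPT-3.

```python
def household_years(training_mwh: float, household_mwh_per_year: float) -> float:
    """How many years of household electricity use a training run corresponds to."""
    return training_mwh / household_mwh_per_year


def emissions_tonnes(training_mwh: float, kg_co2_per_kwh: float) -> float:
    """Tonnes of CO2 for a given grid carbon intensity.

    MWh -> kWh multiplies by 1,000 and kg -> tonnes divides by 1,000, so they cancel.
    """
    return training_mwh * kg_co2_per_kwh


GPT3_TRAINING_MWH = 1287        # estimate quoted in the text
HOUSEHOLD_MWH_PER_YEAR = 10.5   # assumption: roughly a US household's annual use
GRID_KG_CO2_PER_KWH = 0.4       # assumption: illustrative grid carbon intensity

if __name__ == "__main__":
    print(f"{household_years(GPT3_TRAINING_MWH, HOUSEHOLD_MWH_PER_YEAR):.0f} household-years")
    print(f"{emissions_tonnes(GPT3_TRAINING_MWH, GRID_KG_CO2_PER_KWH):.0f} t CO2")
```

Swapping in a lower grid intensity shows why the location of a data center matters: the energy stays the same, but emissions scale linearly with the carbon intensity of the electricity.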

Another consideration is the accessibility of AI technology. As models become larger and more complex, the cost of training and deploying them can become prohibitive for smaller organizations and independent researchers. This raises concerns about the democratization of AI and the potential for a concentration of power among a few large entities.

Ethical considerations also come to the forefront with the scaling of AI. The ability of AI models to generate realistic content, for example, raises questions about misinformation and the potential for misuse. Ensuring that AI is developed and used responsibly is a priority for the community, and ongoing efforts are being made to address these ethical challenges.

Looking Ahead

The future of AI is undoubtedly exciting, with scaling up continuing to be a key driver of progress. As we look ahead, several trends are likely to shape the trajectory of AI development.

Investment and Hardware

Companies are investing heavily in AI, and hardware such as GPUs keeps getting more powerful and cheaper per unit of compute. This trend is expected to continue, driving further advancements in AI. The combination of increased investment and more affordable hardware gives researchers the resources they need to push the boundaries of what AI can achieve.

Unexpected Capabilities

Scaling up AI models can lead to new, unexpected abilities. As models become larger and more sophisticated, they sometimes exhibit emergent behaviors that they were never explicitly trained for. These capabilities can include improved problem-solving skills, better natural language understanding, and the ability to generate highly realistic content.

Green AI

As models grow, the AI community is increasingly concerned about sustainability. In the future, we may see data centers powered by renewable energy, low-energy training techniques, and wider adoption of carbon-neutral AI practices.

Scaling up has proven to be a powerful strategy for advancing AI capabilities. As training data, model parameters, and compute continue to grow, we can expect AI to become even more capable and versatile, opening up new possibilities and applications. Combined with sustained investment and innovation, scaling will likely lead to even more groundbreaking developments in artificial intelligence.

So that was all for this post. I will see you soon with another exciting post. I would love to hear your thoughts, so please leave a comment!